Wednesday MAMLMs: Notes on Zuckerberg’s "PASI" Pivot: From Adtech Moats to "Superintelligence" Moonshot

Killing FAIR, chasing “Personal Artificial Super-Intelligence”: the fast‑second strategy is dead; “PASI” reframes AI as product platform, not plumbing; Scale AI, TBD Lab, and nine‑figure hires signal control over capability. LeCun saw LLMs as super-steroid-boosted “Clever Hans”; Zuckerberg responded by sidelining dissidents and tripling down on LLMs anyway. And so Zuckerberg has ditched FAIR’s long‑horizon research, concentrating power, budget, and speed, and YOLO‑spending to escape supplier status under Apple and Google. Can PASI deliver products before capex crushes credibility?…

FaceBook’s old MAMLM strategy before last spring had made a lot of sense to me:

MAMLMs—Modern Advanced Machine-Learning Models—promise to be of enormous value as natural‑language front-end interfaces to structured and unstructured databases.

Their value is obvious: they turn messy human intent into structured actions—summarizing 200‑page briefs, drafting RFPs with compliance constraints, translating domain jargon across teams, and even orchestrating software tools via text.

But they are hideously expensive to build and run at the frontier. Training a top‑tier model still demands tens of thousands of GPUs, months of wall‑clock time, elite engineering talent, and multi‑million‑dollar electricity and opportunity costs; serving it adds a second bill in inference latency, memory bandwidth, and GPU-hours. Currently, value capture depends on architecture choices—distillation into smaller specialists, retrieval‑augmented generation to reduce hallucinations and compute, tool use to externalize calculation, batching and caching to amortize tokens—and on matching capability to context.

As we as a species explore this space, an awful lot of money will be wasted from the point of view of private business profitability. That spending is perhaps useful to society as a whole for the knowledge it generates about the space of technologies and business models, but it definitely falls into the “engineers” category of what the Rothschilds of the 1800s thought was the most effective way to lose fortunes quickly. And this is supercharged by the current bubble enthusiasm, likely as it is to produce your standard bubble-style overbuilding-and-shakeout at a potential scale of trillions of dollars.

Hence, for FaceBook, there was a single key priority: the defense of its current platform‑monopoly profits against disruptive entrants and architectures—through preemptive acquisitions, technical foreclosure, regulatory gaming, and other methods that rhyme with the historical playbooks of AT&T’s long‑distance choke points and Microsoft’s 1990s browser tie‑ins. Thus the clear strategy was to spend on “AI” enough and in ways that neutralized the possibility of disruption by protecting newly revealed attack surfaces, while staying ready to be a very fast second in deployment where truly useful capabilities for additional audience capture emerged.

There was one area, however, in which it did make sense for FaceBook to try to be first in development and deployment of MAMLMs: ad targeting. The platform’s business model hinged on extracting weak but numerous signals from messy, high‑dimensional user behavior—clicks, dwell time, social graph proximity, image content, and text sentiment—and turning them into real‑time predictions of purchase intent, lift, and conversion probability. Multimodal attention models could fuse a product photo, a caption, and the user’s recent interactions to make decisions. They could also do creative optimization by testing micro‑variants of copy and imagery, and run budget allocation across campaigns with rapid feedback loops. After Apple’s App Tracking Transparency disrupted cross‑app identifiers, first‑party, on‑platform inference grew even more valuable to reconstruct targeting cohorts without external trackers. For FaceBook, being first and strongest in ad-targeting MAMLMs promised a compound edge.

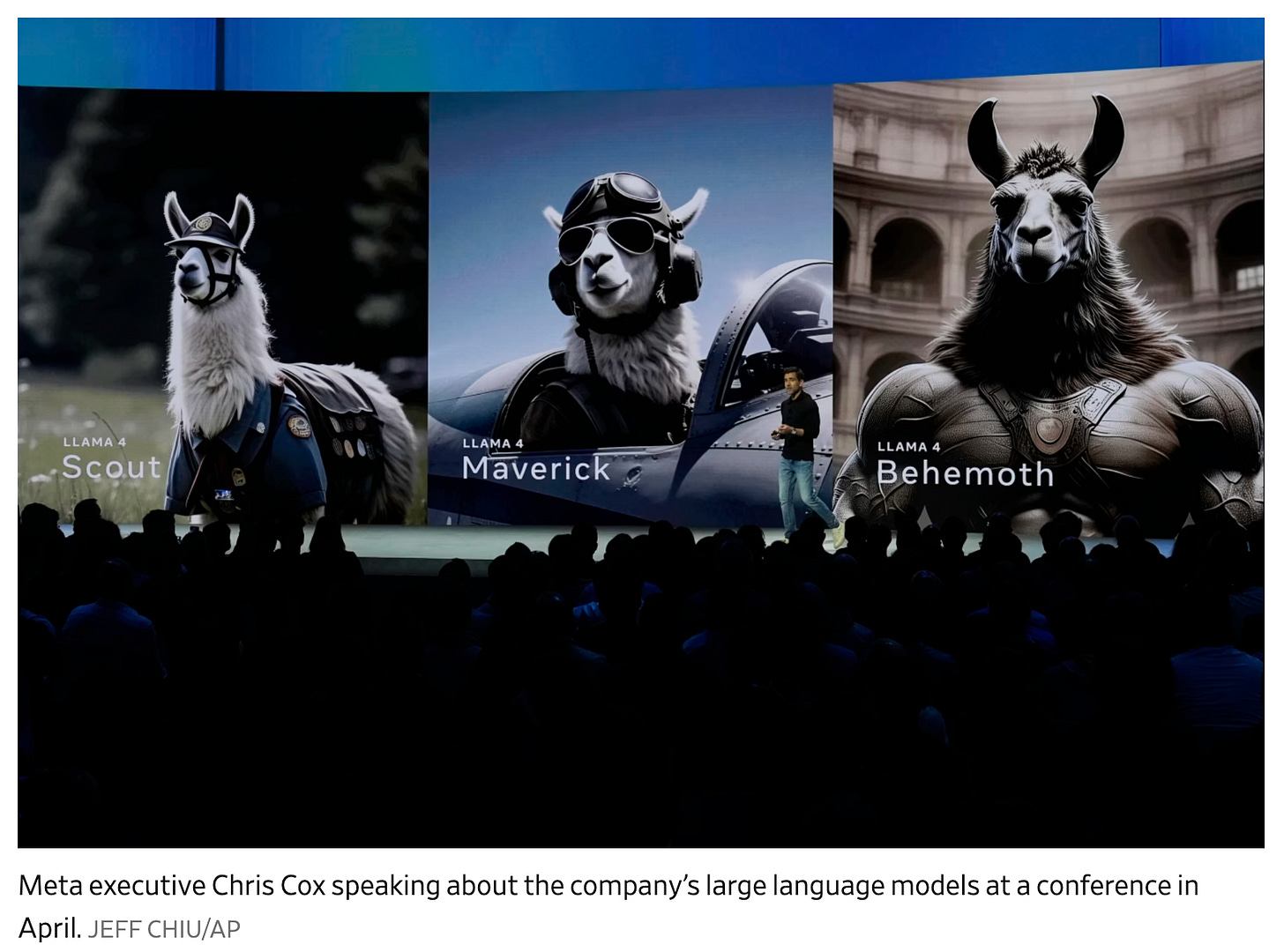

This is a long-winded way of saying that all the pieces of FaceBook’s old “AI” strategy seemed to me to make sense: focus AI-spending on ad targeting; open-source LLaMA to deprive those wanting to make money by selling foundation-model services of their oxygen and their ability to accumulate a war chest to make a run at disruption; and otherwise stand ready to implement GPT LLM MAMLMs when valuable use-cases arose, while spreading the true R&D budget further around so that FaceBook would not get caught flat-footed when the next post-GPT MAMLM thing emerged.

And, of course, all of this was reinforced by Chief Scientist Yann LeCun’s suspicion of GPT LLM “AI”. It was, in his estimation, better thought of as autocomplete and “Clever Hans” on super-steroids, rather than a road to AGI or beyond that to ASI. My belief is that Yann LeCun was and is correct here. GPT LLM MAMLM systems excel at next-token prediction in vast text corpora but lack grounded models of the world, persistent goals, and mechanisms for causal reasoning, for the same architecture that stitches together plausible sentences often has no way to validate their truth. LeCun’s own research agenda—energy-based models, joint training of perception, memory, and action, and world models that can predict consequences—aims at enabling agency and reasoning beyond pattern matching. Empirically, we’ve seen LLMs, even with bolted-on tools that mitigate weakness—retrieval augmentation, chain-of-thought, tool use, external memory, or hybrid neuro-symbolic pipelines—fail. They are scaffolds around a predictive core rather than evidence of emergent general intelligence.

If AGI implies autonomous goal-directed behavior with reliable generalization across modalities and environments, then LeCun’s caution is well taken: today’s GPTs are powerful communicators and reasoning simulators, but without grounded learning they remain brittle pattern machines. Very useful for literature-search and summarization, yes. A leveling-up, in that, properly prompted, they can get your prose to the level of the typical internet s***poster with relative ease, yes. Extraordinarily useful as natural-language front-ends to properly curated datastores, structured and unstructured, most impressively as programming pilots, yes. But something to spend nine figures a year on? Very doubtful.

That was FaceBook’s strategy. It seemed to me to be, well, wise.

But then, last spring, all of a sudden, everything at FaceBook changed. Let me give the mic to the very sharp Paul Kedrosky:

Paul Kedrosky: Meta: Purging of Dissidents vs Open Rebellion <https://paulkedrosky.com/metas-purging-of-dissdents/>: ‘Part purging of LLM dissidents, part philosophical break…. Mark Zuckerberg has reoriented Meta’s AI efforts around “superintelligence,” led by Alexandr Wang of Scale AI. LeCun’s long-term research lab has been gutted and folded into product groups…. LeCun… has consistently argued that LLMs are a clever trick, but a dead end on the path to higher levels of intelligence…. [Zuckerberg] has tripled down on LLMs both in terms of hiring and capex. LeCun’s view is that intelligence emerges from interacting with the physical world…. This marks the last vestige of major non-LLM research disappearing from inside the hyperscalers. It is also, and more importantly, the first open act of rebellion from a founding deep-learning figure against the LLM orthodoxy dominating Silicon Valley…

Referencing:

Melissa Heikkilä, Hannah Murphy, Stephen Morris & George Hammond: Meta chief AI scientist Yann LeCun plans to exit and launch own start-up <https://www.ft.com/content/c586eb77-a16e-4363-ab0b-e877898b70de?emailId=dd4d1c03-8c5e-4163-8425-5996b93e2317&segmentId=ce31c7f5-c2de-09db-abdc-f2fd624da608>: ‘Turing Award winner seeks to depart as Mark Zuckerberg makes ‘superintelligence’ push…. Zuckerberg hired Alexandr Wang… [a] “superintelligence” team… paying $14.3bn to hire the 28-year-old…. Zuckerberg also personally handpicked an exclusive team, called TBD Lab, to propel development… luring staff from rivals such as OpenAI and Google with $100mn pay packages…. Zuckerberg has come under growing pressure from Wall Street to show that his multibillion-dollar investment in becoming an “AI leader” will pay off and boost revenue. Meta’s shares plunged 12.6 per cent — wiping out almost $240bn from its valuation — in late October after the chief executive signalled higher AI spending ahead, which could top $100bn next year…

FaceBook’s AI vision had been that of FAIR—Facebook Artificial Intelligence Research. It was created in 2013 to push long‑horizon, open research in areas like self‑supervised learning, computer vision, NLP, robotics, and tooling such as PyTorch, with labs across Menlo Park, New York, London, Paris, and more. Over time, FAIR’s role shifted as Meta prioritized productized generative AI; key leaders departed and work on models like LLaMA moved under product teams, while FAIR refocused on longer‑term “advanced machine intelligence.”

The governance gamble is clear: less basic research, more integrated shipping. The pivot centralizes technical and budget authority under a small cadre, echoing historical chokepoint strategies to deter disruption. FaceBook’s talent strategy—nine‑figure packages and direct CEO recruiting—trades internal continuity for lateral influx. Cutting roughly 600 research roles while rehiring into TBD suggests a barbell org: fewer theorists, more load‑bearing product/model owners. The TBD Lab “SWAT team” concentrates decision rights, reduces internal transaction costs, and shortens model iteration cycles. The $14.3bn Scale AI deal for a 49% stake created a ready‑made leadership and evaluation apparatus for model quality, accelerating the ramp without a full acquisition.

But when the technical paradigm shifts away from LLMs, FaceBook will have to pivot again without FAIR’s bench.

Strategically, ad-targeting remains the revenue flywheel. A stronger model stack can lift ad load and price while AI products deepen engagement. Even with aggressive build‑out, Meta’s cash generation means that the downside is valuation, not solvency. Near‑term revenue cushions come from ad load and pricing uplift driven by AI. The context, of course, is insanely ballooning sector capex: reports of multi‑year US $600bn AI infra plans underscore the wager on power, land, chips, and water becoming strategic inputs. FaceBook telegraphed “notably more than” $100bn in 2026‑style spend equivalents, triggering a sharp drawdown. Investors may balk at YOLO capital intensity absent clear product pull‑through, demanding product proofs, not just rhetoric about ASI glory.

But is all of this a good idea—for the future of humanity, for the health of the tech sector as a leading sector, for Mark Zuckerberg’s personal status, for the returns to investors in FaceBook?

The new buzzphrase is “personal artificial superintelligence”: PASI. Medium‑term returns depend on consumer adoption of PASI, whatever that may be. We see the function of the buzzphrase in the wheeling of FaceBook as an organization in a new direction: reframing “AI” as a consumer platform, not a back‑office utility; aligning FaceBook’s moats protecting its platform-monopoly profits with context-rich devices like glasses and phones; abandoning the prior “fast‑second” posture and accepting higher execution risk to avoid platform disruption by rivals; and letting speed and coordination trump open science.

The technology use-case bet may be that owning the user interface and on‑device context will matter more than being the best general model, echoing past social‑graph advantages.

Or it may not. It may be a more cynical move by Zuckerberg—that to maintain his status in Silicon Valley, FaceBook needs to be “the best” rather than a mere supplier driving the flow of the real big profits in phones and OSes to Apple and Google; that he will spend his investors’ money like water to make that so; and that part of making that so is promising top-AI talent that they will not be working on ad-targeting and customer-relations ChatBots but on nebulous “ASI”.