Another Brief Note on the Flexible-Function View of MAMLMs

Modern Advanced Machine Learning Models (MAMLMs): are they BRAINS!!!! or not? So far it is still very clear to me that they are not. And I see it as highly probable that MAMLMs as we know them are still at the very beginning of what might become the process of building Artificial Intelligence, as capable as they are at Complex Information Processing. & yet we overascribe mind to them. Searle’s Chinese Room does not understand Chinese until it grows as large as the entire Earth and is serviced by tens of thousands of robots traveling at lightspeed. & we are still very far from that…

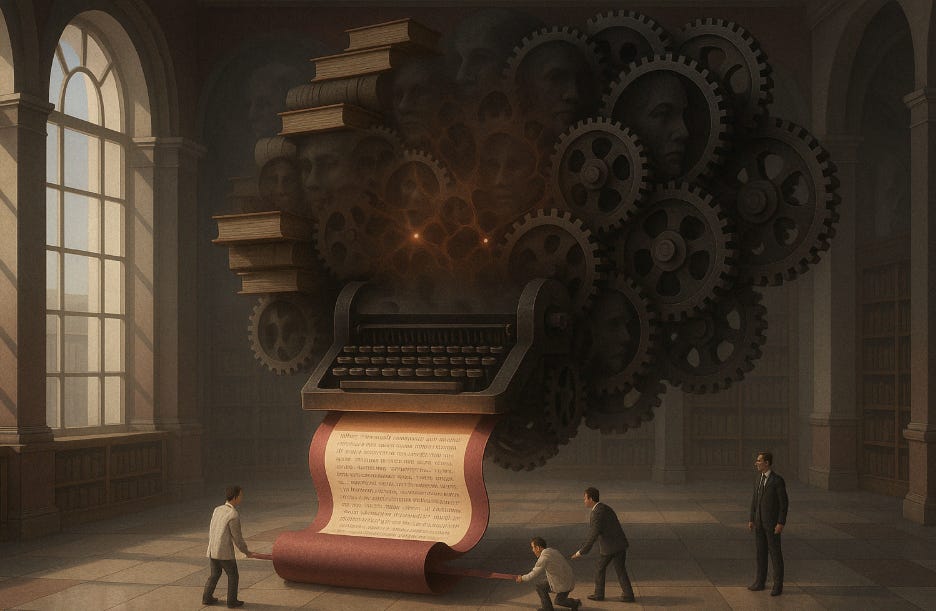

If you start from the premise that a language model like ChatGPT is a very flexible, very high-dimensional, very big-data regression-and-classification engine, best seen as a function from the domain of word-strings to the range of continuation words, I think a large number of things become clear.

First, because its training dataset is sparse in its potential domain—nearly all even moderate-length word-sequences that are not boilerplate or cliché are unique—its task is one of interpolation: take word-sequences “close” to the prompt, examine their continuations, and average them. Thus while pouring more and more resources into the engine does get you, potentially, a finer and finer interpolation, it seems highly likely that this process will have limits rather than grow to the sky, and it is better to look at it as an engine summarizing what humans typically say in analogous linguistic situations rather than any form of “thinking”.
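To make the interpolation picture concrete, here is a minimal sketch in Python. It is a toy under loudly stated assumptions, not a description of how any real language model is implemented: the four-line corpus, the word-overlap `similarity` measure, and the function name `continuation_distribution` are all hypothetical stand-ins chosen for illustration. It does only what the paragraph above describes: find the stored word-sequences "closest" to the prompt, look at their continuations, and average them.

```python
from collections import Counter

# Hypothetical toy "training set": (context, continuation word) pairs.
CORPUS = [
    ("the cat sat on the", "mat"),
    ("the dog sat on the", "rug"),
    ("a cat slept on the", "mat"),
    ("stocks fell after the", "news"),
]


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets: a crude stand-in for 'closeness'."""
    wa, wb = set(a.split()), set(b.split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def continuation_distribution(prompt: str, k: int = 3) -> dict[str, float]:
    """Similarity-weighted average of the continuations of the k nearest contexts."""
    nearest = sorted(
        CORPUS, key=lambda pair: similarity(prompt, pair[0]), reverse=True
    )[:k]
    weights = Counter()
    for context, word in nearest:
        weights[word] += similarity(prompt, context)
    total = sum(weights.values())
    # If nothing in the corpus resembles the prompt, there is nothing to average.
    return {w: v / total for w, v in weights.items()} if total else {}


# The prompt itself never appears in the corpus; the answer is interpolated.
dist = continuation_distribution("the cat slept on the")
print(max(dist, key=dist.get), dist)
```

The prompt here matches no stored context exactly, yet the sketch still returns a sensible distribution, because it borrows from near neighbors. A prompt with no near neighbors at all gets an empty answer, which is the toy version of the limits argued for above.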

I think the post-ChatGPT history of LLMs bears this out:

Sebastian Raschka: The State of Reinforcement Learning for LLM Reasoning <https://magazine.sebastianraschka.com/p/the-state-of-llm-reasoning-model-training>: ‘Releases of new flagship models like GPT-4.5 and Llama 4…. Reactions to these releases were relatively muted…. The muted response… suggests we are approaching the limits of what scaling model size and data alone can achieve. However, OpenAI’s recent release of the o3 reasoning model demonstrates there is still considerable room for improvement when investing compute strategically, specifically via reinforcement learning methods tailored for reasoning tasks. (According to OpenAI staff during the recent livestream, o3 used 10× more training compute compared to o1)…’

Second, reinforcement learning, prompt engineering, and such are ways of attempting to condition this interpolation process by altering the domain word-sequence in such a way as to carry it into a portion of the training dataset where, when judged by humans, this function (word-strings) → (continuations) does not suck. That is, in some sense, all they are: you have a function trained on internet dreck in which there are some veins of gold (accurate information and useful continuations of word-strings), and you need to transform the word-string you send so that it lands inside one of those veins.
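A toy sketch of that "steer the prompt into a vein of gold" idea follows, again in Python and again purely illustrative. Everything in it is an assumption made up for the example: the three-row corpus, the human-judgment flag, the `"reference entry "` prefix, and the helper names `overlap`, `nearest_continuation`, and `condition`. It models only the prompt-rewriting case, with a nearest-neighbor lookup standing in for the trained function; it makes no claim about how reinforcement learning is actually carried out inside a real model.

```python
def overlap(a: str, b: str) -> float:
    """Jaccard overlap of word sets, used as a crude notion of 'closeness'."""
    wa, wb = set(a.split()), set(b.split())
    return len(wa & wb) / len(wa | wb)


# Hypothetical corpus rows: (context, continuation, judged good by a human?)
CORPUS = [
    ("lol whats the capital of australia", "sydney", False),           # dreck
    ("lol the capital of australia is obviously", "sydney", False),    # dreck
    ("reference entry the capital of australia is", "canberra", True), # gold
]


def nearest_continuation(prompt: str) -> tuple[str, bool]:
    """Return the continuation (and its human judgment) of the closest context."""
    _, word, good = max(CORPUS, key=lambda row: overlap(prompt, row[0]))
    return word, good


def condition(prompt: str) -> str:
    """Hypothetical transformation that nudges the prompt toward the 'gold' region."""
    return "reference entry " + prompt


raw = "whats the capital of australia"
print(nearest_continuation(raw))             # lands in the dreck
print(nearest_continuation(condition(raw)))  # lands in the vein of gold
```

The underlying function never changes between the two calls. Only the word-string sent to it does, and that alone decides which neighborhood of the training data the answer is drawn from.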