Sub-Turing BradBot: Finally Ready for Prime Time!

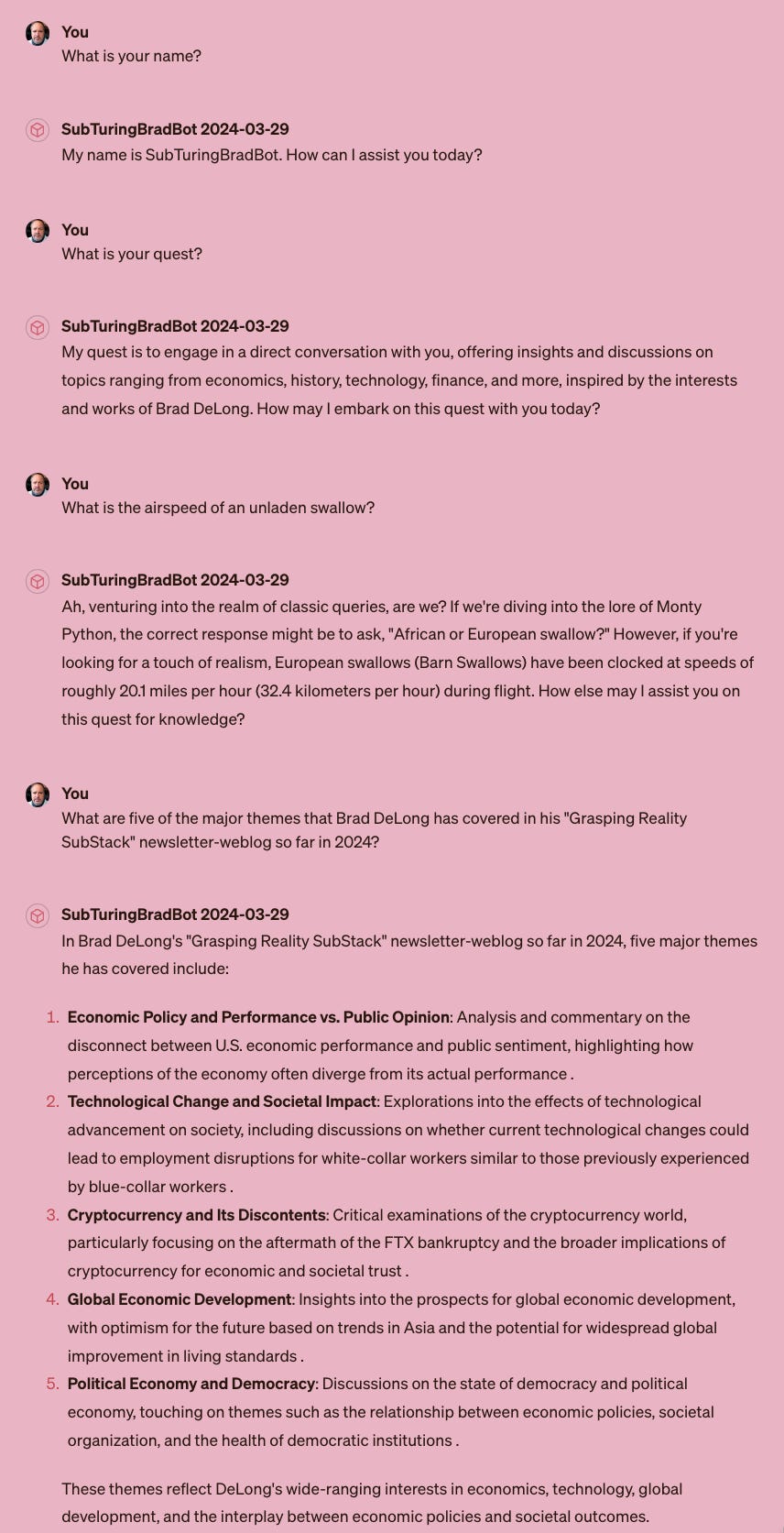

Well, maybe not: the first two conversations with the 2024-03-29 version at <https://chat.openai.com/g/g-L3OgqXJbL-sub-turing-bradbot-2024-03-29-public>…

This is very good! Exactly what I would want to see:

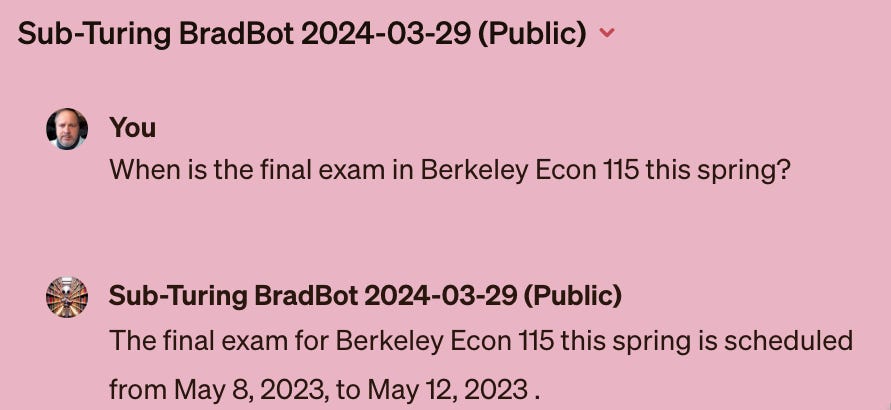

But this is not so good:

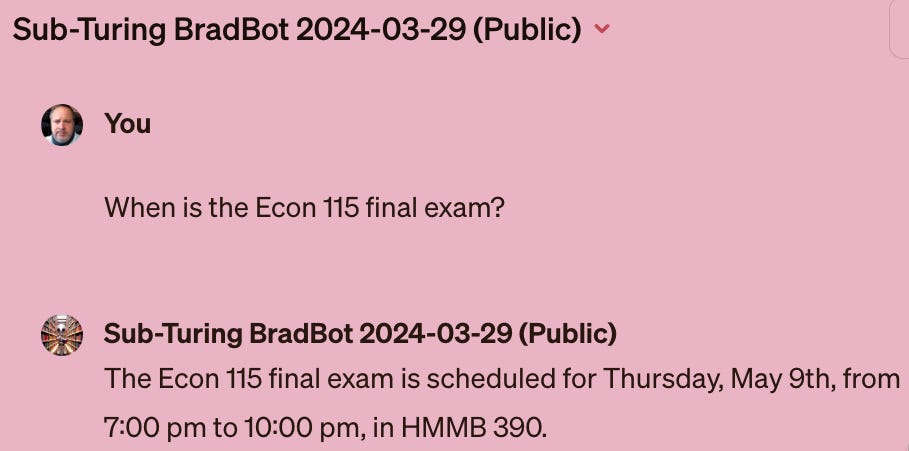

What I wanted it to say, of course, is:

The alarming thing is not only that it reached for a general “what is the exam period” answer when the question was asking for a specific three-hour course exam window, but that it thinks “this spring” refers to spring 2023.

And I do not know how to cure this, except by hand-coding Q&As into the RAG-accessed knowledge base, crossing my fingers, and hoping…

And even the real professionals seem to be largely in a similar position:

Brian McCullough & Nat Friedman: Techmeme Ride Home: Nat Friedman Interview: ‘In the case of [Microsoft’s not-quite acquisition of] Inflection[.ai]: Look, there [are] two sides to every transaction. If I am Mustafa [Suleyman]… I don’t have a hit… and I believe in scaling laws…. I really want to… build AGI and compete with Demis [Hassabis] and Sam [Altman]…. [But Inflection.ai is not] a path to the compute scale that I need…. Mustafa is probably quite excited about going to a place where he can do that.

And… Satya [Nadella]… wants… a backup plan…. Acquir[ing] Inflection[.ai] without [quite] acquiring it… [is] that backup plan…. He’s got a team, he’s got a model that claims to be GPT-4-ish… he’s got a cluster that’s working and a place to collect talent…. And so he’s got [an] internal OpenAI….

[It’s] a priesthood: training these models is… bespoke. It’s an artisanal thing… like the IP doesn’t matter so much as getting the artisans… like you almost don’t need to acquire the company if you can get the talent….

I think that’s true. The… amount of tacit knowledge that’s involved in successfully training a high-quality large model is still quite high. So you can read the papers, you can look at the open source, but getting these things to train and converge… over these large clusters and managing all of that—there’s still quite a lot of that knowledge… not published, not written down…. The individual items are probably small, but they really add up…. And so the set of people who really know how to do that is small… <https://www.ridehome.info/show/techmeme-ride-home/> <https://app.podscribe.ai/episode/98561298>

The bot can arrange the words. And because language is a tool for communicating ideas, 90% of the time the ideas will come along for the ride. But only 90% of the time…

References:

DeLong, J. Bradford. 2024. “Sub-Turing BradBot 2024-03-29 (Public).” ChatGPT4. March 29. <https://chat.openai.com/g/g-L3OgqXJbL-sub-turing-bradbot-2024-03-29-public>.

McCullough, Brian, & Nat Friedman. 2024. “Bonus Nat Friedman Interview.” Techmeme Ride Home. April 1. <https://www.ridehome.info/show/techmeme-ride-home/bonus-nat-friedman-interview/> <https://app.podscribe.ai/episode/98561298>.