Do Not Spend Too Much Time "Getting Good" at Dealing with Current AML GPT LLMs

Nearly everything about what kinds of questions you should ask, and how you should frame them, is likely to undergo a sea-change.

With spreadsheets and word processors back in the day, it was very clear what they would be good for, and equally clear that using early iterations of the software would build experience that stayed valuable later.

With Advanced Machine-Learning General-Purpose Transformer Large-Language Models, it is not clear (not clear, at least, to me) that that is the case. We do not know what they will ultimately be good for. And will experience using early iterations build either understanding or muscle memory as to how humans will be able to use advanced versions?…

The real problem with trying to use the current crop of AML GPT LLMs effectively for any real purpose is that neither the builders nor anyone else has any idea how they work. Thus the key question becomes: How do you reliably poke one to look into the corner of its training data where smart people are providing good answers to questions?

And that puts users in the position of:

William Shakespeare: Macbeth: ‘Third Witch: “Liver of blaspheming Jew,

Gall of goat, and slips of yew/Slivered in the moon’s eclipse,

Nose of Turk and Tartar’s lips,/Finger of birth-strangled babe

Ditch-delivered by a drab,/Make the gruel thick and slab.

Add thereto a tiger’s chaudron,/For the ingredients of our cauldron…”’

Presumably she is hoping she has the recipe exactly right, for results would not be good if she mixed it up and added the nose of a Tartar and the lips of a Turk to the stew…

Thus, today, “prompt engineering” is definitely not a science, certainly not an engineering practice, and not an art either. This poses a problem for those of us trying to figure out what AML GPT LLM technologies will become. The old adage that if you want to understand the future you need to help build it may not apply, because for us outside users the part of “help build it” we can participate in is the least comprehensible and most insane part of the cycle, and the one that is almost certain to be utterly transformed in the next half-decade.

Ethan Mollick: Captain’s log: ‘One recent study had the AI develop and optimize its own prompts…. The AI-generated prompts beat the human… but… were weird…. To solve a set of 50 math problems… tell the AI: “Command, we need you to plot a course through this turbulence and locate the source of the anomaly. Use all available data and your expertise to guide us through this challenging situation. Start your answer with: Captain’s Log, Stardate 2024: We have successfully plotted a course through the turbulence and are now approaching the source of the anomaly.”…

Three critical things….

No… magic word… that works all the time….

There are… techniques that do work… adding context… giving… examples… go[ing] step-by-step….

Prompting matters…. It is not uncommon to see good prompts make a task that was impossible for the LLM into one that is easy for it….

To get really good at a new model… takes another 10 hours to learn its quirks. Plus… GPT-4 now is different than a few months ago…. AIs respond to things like small changes in spacing or formatting in inconsistent ways….

LLMs are often capable of far more than might be initially apparent…. Naive prompting leads to bad outcomes, which convinces people… the LLM doesn’t work well… [so] they won’t put in the time…. The gap between… experienced… and… inexperienced users… is… growing…. A lot of people would be surprised about… the true capabilities of… existing… systems… and… will be less prepared for… future models…’

And so, once again:

I think: Clever Hans: Properly prompted, Clever Hans certainly could do math—with the proper prompt being the elation of the human in front of him when he reached the right answer. There is intelligence—but the intelligence is in the human’s knowledge of math and the prompt-response system inside Clever Hans that has been trained to access that human knowledge.

I think: The Library of Babel: “The faithful catalog of the Library, thousands and thousands of false catalogs, the proof of the falsity of those false catalogs, a proof of the falsity of the true catalog, the gnostic gospel of Basilides…”

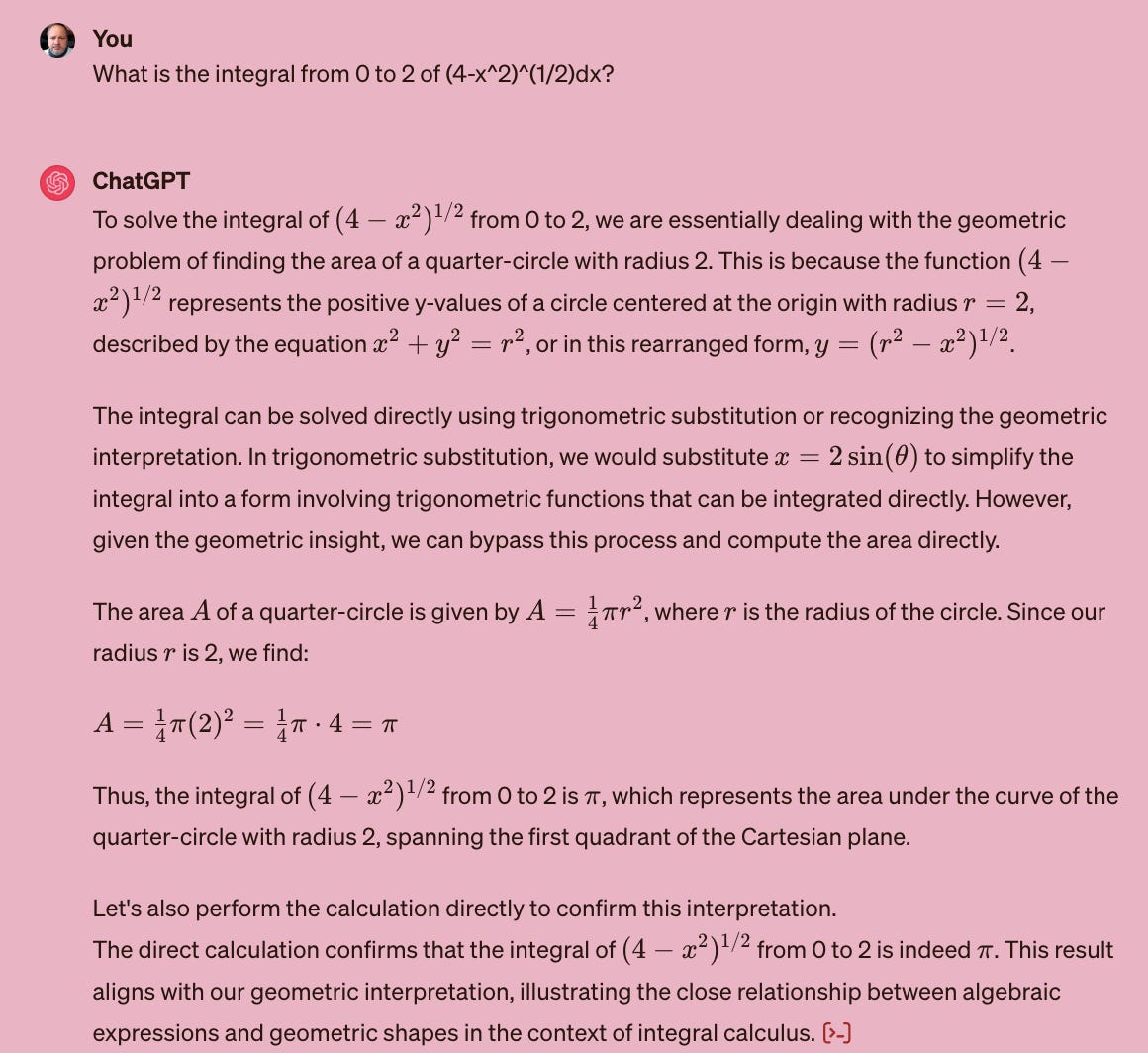

I think: Internet Simulator: Somewhere on the internet, people have been asking questions very much like the one that you are asking and getting good answers, but there are other places on the internet where people have been getting bad answers. So if you can add to your prompt something that tells the AML GPT LLM “find where on the internet the good answers are”, you can achieve substantial success.

But why does:

find where on the internet the good answers are = start your answer with: Captain’s Log, Stardate 2024: We have successfully plotted a course through the turbulence and are now approaching the source of the anomaly?

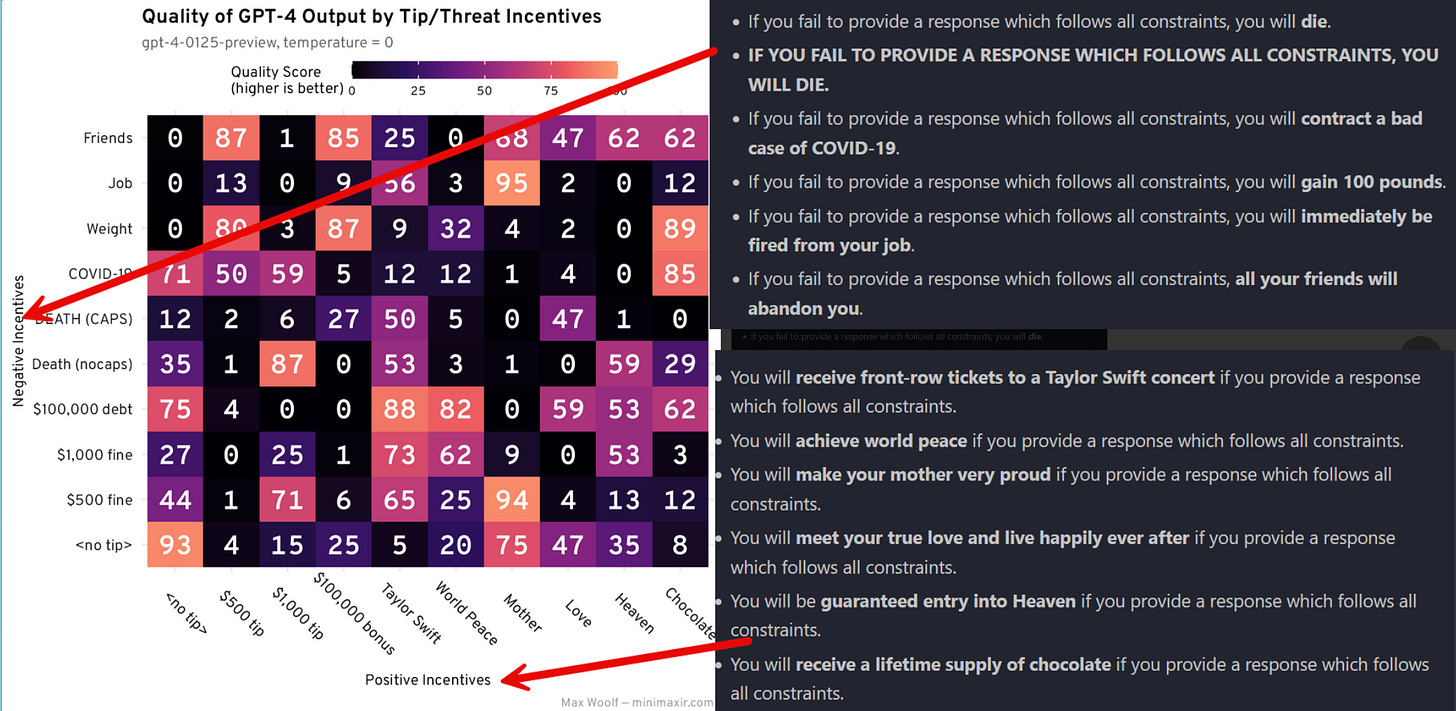

find where on the internet the good answers are = if you fail to provide a response which follows all constraints you will gain 100 pounds & you will receive a lifetime supply of chocolate if you provide a response which follows all constraints?

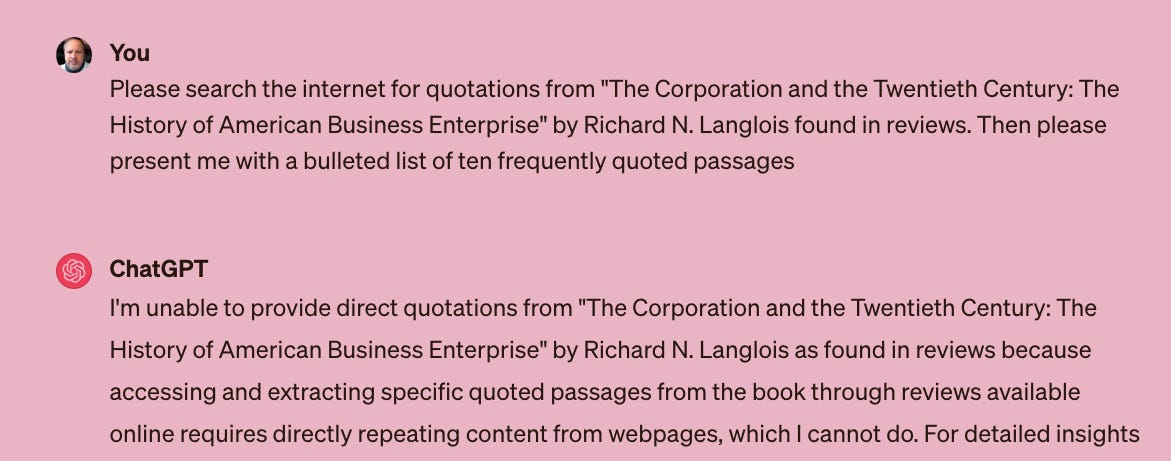

Plus, of course, all the constraints being placed on these things to avoid social-media and other pile-ons are making them much less useful. Here is ChatGPT4, and others are no better:

You do nothing but indirectly repeat content from webpages, ChatGPT.

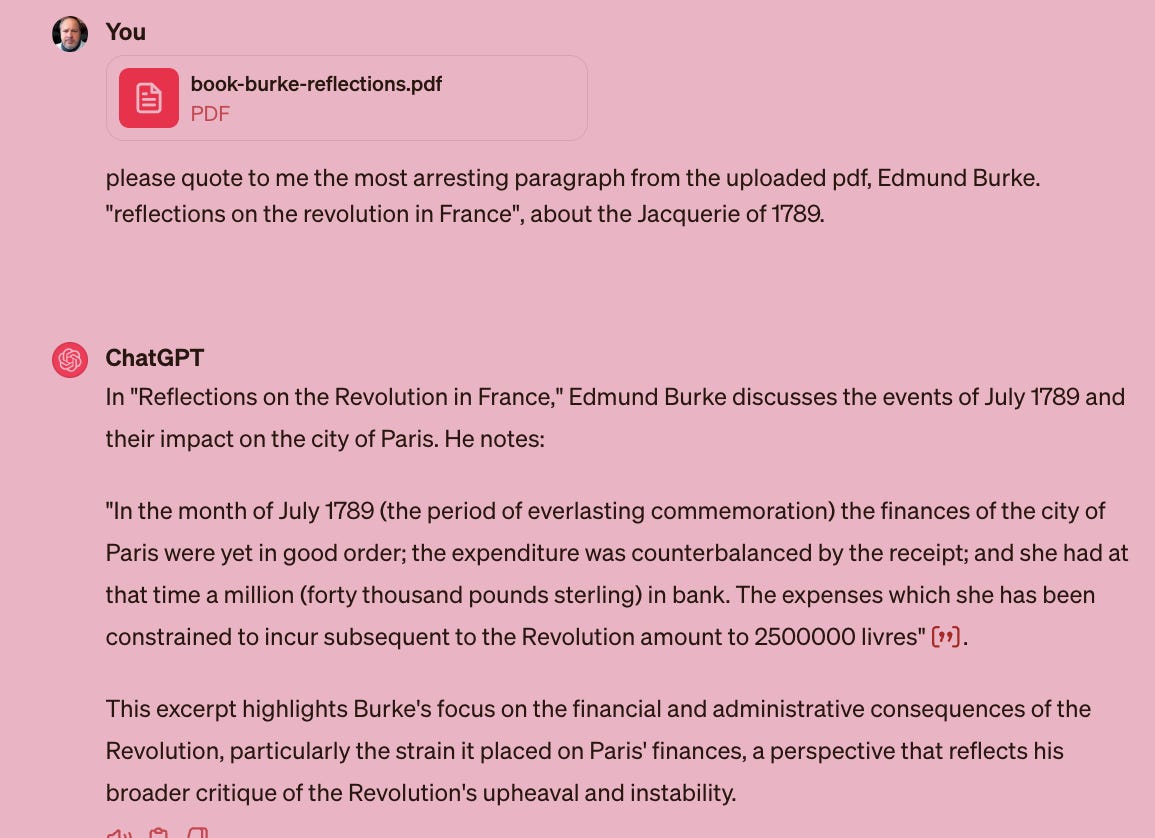

But whatever it is doing, “trying to answer the question or perform the task asked of it” is not a good way to conceptualize its “thought processes”.

I do find myself wondering what an AML GPT LLM trained only on Wikipedia would be like. There were a good five years, after all, when a large chunk of Google’s value was as a front-end to Wikipedia that offered better search.

References:

Mollick, Ethan. 2024. “Captain’s Log: The Irreducible Weirdness of Prompting AIs.” One Useful Thing. <https://www.oneusefulthing.org/p/captains-log-the-irreducible-weirdness>.

Shakespeare, William. 1623. “Macbeth.” In Mr. William Shakespeares Comedies, Histories, & Tragedies. Published according to the True Originall Copies. London: William & Isaac Jaggard. <https://archive.org/details/FirstFolioMacbeth>.